6 minutes

3/4 Implementing User Stories with AI-Powered Code Generation

In the previous article, we demonstrated how Project Copilot can take a grooming call transcript and technical documentation to create a fully detailed backlog in Jira. Today, we’re taking the next logical step: implementing one of those user stories using AI-powered coding tools.

This article focuses on implementing User Story 1 from our backlog using Cursor, an AI-driven code generation tool. We’ll show you how you can prompt Cursor to generate fully functional code with just a simple natural language command.

Step 1: Recap of User Story 1

For those who might need a quick refresher, here’s what User Story 1 entails:

User Story 1: Voice Capture for Screenshot Descriptions

As a user, I want to use voice input to describe the screenshot of the active Chrome tab so that the description can be saved as a text file along with the screenshot.

Key Acceptance Criteria:

- Implement voice input using the Web Speech API.

- Display a text preview of the transcription.

- Allow the user to save both the screenshot and the transcribed text as separate files.

Step 2: Using Cursor for Code Generation

To implement User Story 1, we’ll leverage Cursor’s natural language processing capabilities to generate the necessary code. Here’s how simple it is:

Command in Cursor chat:

@manifest.json @popup.html @background.js @popup.js @tech-reference.md @userstory-01.md

Please based on the attached code and document implement User Story 01.

By attaching the files related to the Chrome extension project—manifest, HTML, JavaScript files, and the technical reference—along with the user story, we provide Cursor with all the context it needs to generate the implementation.

Step 3: Watch the Magic Happen

Cursor quickly processes the command, analyzes the attached files, and within seconds, it generates the code necessary to implement the voice capture functionality. The output includes all the components needed to fulfill the acceptance criteria of User Story 1.

Cursor output

Certainly! I’ll implement the voice capture feature based on the user story and the existing code. I’ll make changes to the necessary files to add this functionality.

Let’s start with the changes:

{

"manifest_version": 3,

"name": "Screenshot Enhancer",

"version": "1.0",

"description": "Capture and enhance screenshots with voice input",

"permissions": [

"activeTab",

"tabs",

"storage"

],

"host_permissions": [

"<all_urls>"

],

"action": {

"default_popup": "popup.html"

},

"background": {

"service_worker": "background.js"

},

"content_security_policy": {

"extension_pages": "script-src 'self'; object-src 'self'"

}

}

Next, let’s update the popup.html file:

<!DOCTYPE html>

<html lang="en">

<head>

<!-- ... existing head content ... -->

<style>

/* ... existing styles ... */

#voiceCaptureBtn {

background-color: #2196F3;

margin-top: 10px;

}

#voiceCaptureBtn:hover {

background-color: #0b7dda;

}

#voiceCaptureBtn.recording {

background-color: #f44336;

}

#voiceOutput {

margin-top: 10px;

padding: 10px;

border: 1px solid #ccc;

min-height: 50px;

}

</style>

</head>

<body>

<!-- ... existing content ... -->

<button id="voiceCaptureBtn">Start Voice Capture</button>

<div id="voiceOutput"></div>

<!-- ... existing content ... -->

<script src="popup.js"></script>

</body>

</html>

Now, let’s update the popup.js file to implement the voice capture functionality:

// ... existing code ...

// Add these functions at the beginning of the file

function checkSpeechRecognitionSupport() {

return 'webkitSpeechRecognition' in window || 'SpeechRecognition' in window;

}

function createSpeechRecognitionObject() {

return new (window.webkitSpeechRecognition || window.SpeechRecognition)();

}

// Add this to your existing DOMContentLoaded event listener

document.addEventListener('DOMContentLoaded', function() {

// ... existing code ...

const voiceCaptureBtn = document.getElementById('voiceCaptureBtn');

const voiceOutput = document.getElementById('voiceOutput');

if (checkSpeechRecognitionSupport()) {

const recognition = createSpeechRecognitionObject();

recognition.continuous = true;

recognition.interimResults = true;

recognition.lang = 'en-US';

let isRecording = false;

voiceCaptureBtn.addEventListener('click', () => {

if (!isRecording) {

recognition.start();

voiceCaptureBtn.textContent = 'Stop Voice Capture';

voiceCaptureBtn.classList.add('recording');

} else {

recognition.stop();

voiceCaptureBtn.textContent = 'Start Voice Capture';

voiceCaptureBtn.classList.remove('recording');

}

isRecording = !isRecording;

});

recognition.onresult = (event) => {

let interimTranscript = '';

let finalTranscript = '';

for (let i = event.resultIndex; i < event.results.length; ++i) {

if (event.results[i].isFinal) {

finalTranscript += event.results[i][0].transcript;

} else {

interimTranscript += event.results[i][0].transcript;

}

}

voiceOutput.innerHTML = finalTranscript + '<i style="color: #999;">' + interimTranscript + '</i>';

};

recognition.onerror = (event) => {

console.error('Speech recognition error:', event.error);

voiceOutput.textContent = 'Error: ' + event.error;

};

recognition.onend = () => {

isRecording = false;

voiceCaptureBtn.textContent = 'Start Voice Capture';

voiceCaptureBtn.classList.remove('recording');

};

} else {

voiceCaptureBtn.disabled = true;

voiceCaptureBtn.textContent = 'Voice Capture Not Supported';

voiceOutput.textContent = 'Your browser does not support the Web Speech API. Please use a compatible browser like Chrome.';

}

});

// ... rest of the existing code ...

These changes implement the voice capture feature as described in the user story. Here’s a summary of the updates:

- Added the “storage” permission to the manifest file for potential future use.

- Updated the extension description in the manifest.

- Added a new button and output div in the popup.html file.

- Implemented the Web Speech API integration in popup.js, including:

- Browser support check

- SpeechRecognition object creation

- Button click handler to start/stop recording

- Event handlers for speech recognition results, errors, and end of speech

- Visual feedback for the recording state

These changes satisfy the acceptance criteria mentioned in the user story:

- A clearly labeled button is added to activate voice recording.

- The Web Speech API starts recording when the button is clicked.

- Visual feedback is provided through button text changes and CSS classes.

- Error handling is implemented for unsupported browsers and recognition errors.

- The application checks for browser support and handles cases where the feature is not available.

The implementation allows users to start and stop voice recording, see the transcribed text in real-time, and provides appropriate feedback and error handling.

Step 4: Testing the Generated Code

After generating the code, we tested the functionality directly within the Chrome extension. The implementation worked seamlessly, allowing users to capture voice input, preview the transcription, and save both the screenshot and transcription as files.

Here’s a video demonstrating how the implementation works:

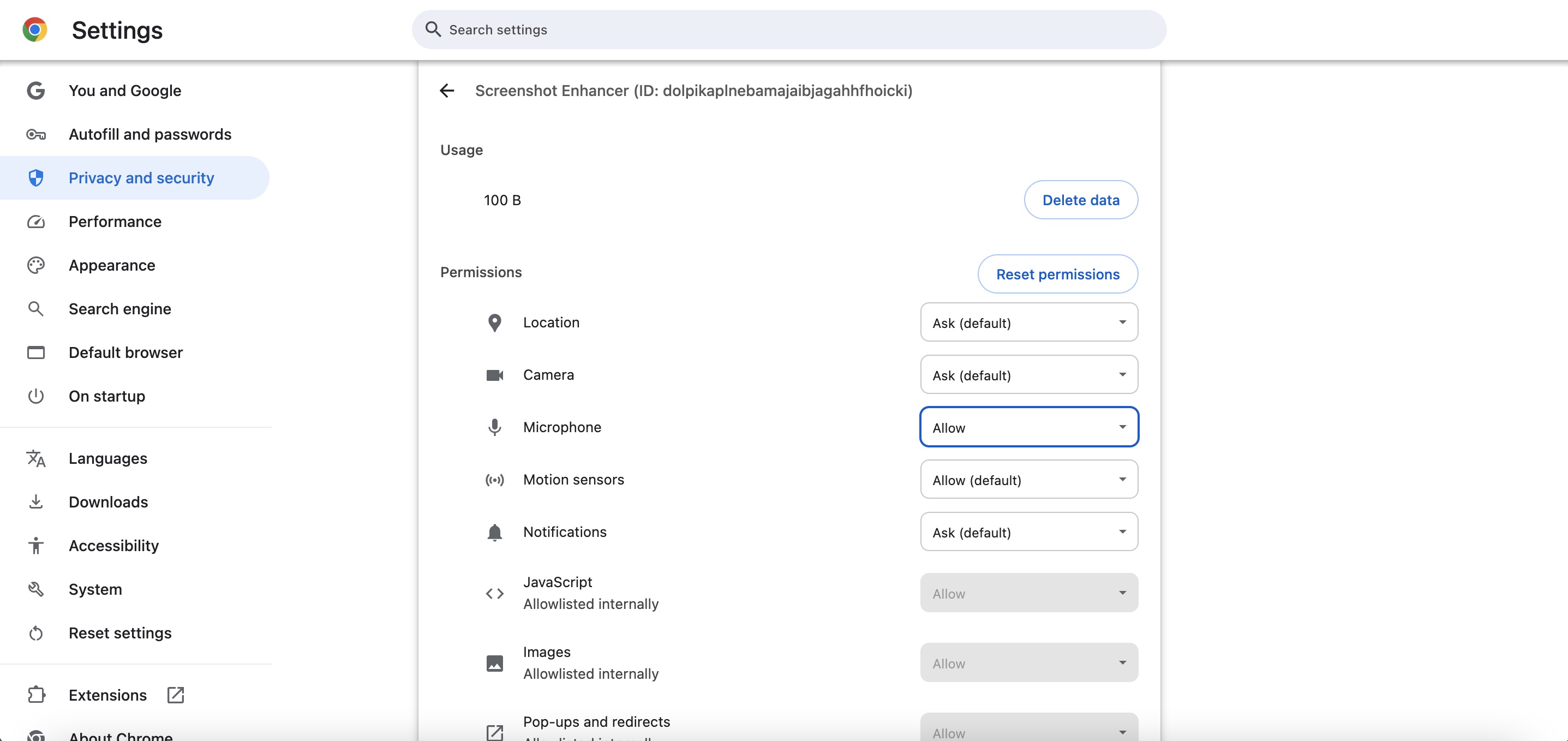

And here’s a screenshot showing the permission request to use the microphone in the Chrome extension:

Step 5: Homework – Implementing User Stories 2 and 3

Following the same approach, you can implement User Story 2 and User Story 3. Just attach the relevant files and prompt Cursor with a simple command to generate the code. This task is left as homework for you to try on your own.

Conclusion: Implementing with AI

As you can see, AI tools like Cursor can drastically reduce the time and effort required to implement user stories, even for complex features like voice capture. Our knowledge of code is mainly utilized to understand and refine what Cursor generates, not to write the code ourselves.

By integrating tools like Project Copilot and Cursor into your workflow, you can streamline every step of the software development process, from backlog creation to code implementation. This approach not only accelerates development but also ensures that your projects stay aligned with Agile principles.

What’s Next?

Stay tuned as we continue our journey into AI-driven software development, pushing the boundaries of what’s possible with these powerful tools.

Matías Molinas

CTO, Project Copilot

1126 Words

2024-08-28 00:00